List different types of Cloud Services.

Different types of Cloud Services are:

- Software as a Service (SaaS) – It is a software distribution model in which services are hosted by a cloud service provider. Eg- salesforce, dropbox

- Data as a Service (DaaS) – DaaS is a data management strategy that uses the cloud to deliver data storage, integration, processing, and/or analytics services via a network connection.

- Platform as a Service (PaaS)- It provides a runtime environment. It allows programmers to easily create, test, run, and deploy web applications. Eg- aws elastic beanstalk,

- Infrastructure as a Service (IaaS) – It is a type of cloud computing service that offers essential computing, storage, and networking resources on demand, on a pay-as-you-go basis. Eg- AWS-ec2.

Mention what are the key components of AWS.

The key components of AWS are:

– Elastic Compute Cloud (EC2): It provides on-demand computing resources for hosting applications. It is handy in case of unpredictable workloads

– Simple Storage Device or (S3): It is a storage device and the most widely used AWS service

– Route 53: A DNS web service

– Amazon RDS – Relational Database Services makes database configuration, management, and scaling easy in the cloud. RDS is available on various database instances like – Amazon Aurora, PostgreSQL, MySQL, MariaDB, and Oracle. database, and SQL server. By leveraging the AWS Database Migration Service, you can easily migrate or reproduce your existing databases to Amazon RDS.

– Identity and Access Management: It provides enhanced security and identity management for your AWS account.

– Amazon VPC: Virtual Private Cloud enables you setting up a reasonably isolated section of the AWS Cloud where you can deploy AWS resources at scale in a virtual environment. VPC gives you total control over your environment, which includes the option to choose your IP address range, creation of subsets, and arrangement of route tables and network access points.

– AWS Lambda: It is a serverless and event-driven computing service. It allows running codes virtually for applications without any provisioning or managing servers.

– Amazon CloudFront: CloudFront is a content delivery network platform that executes at rapid rates with the secure distribution of data, videos, apps, and APIs on a global scale with low delay times.

For what purpose do you use AWS in your organization?

- Our company’s product is hosted on AWS, It’s a 3-tier web application deployed using services like – -ec2, route53, s3, RDS, IAM, and VPC.

- We are also using AWS to deploy various DevOps tools, like – GIT, Jenkins, sonarqube, and Artifactory.

- We are also performing POC to containerize our product. Products and shared services are being containerized and for this, we are using services like – ECR, EKS, and ECS.

What is the relation between the Availability Zone and Region?

AWS regions are separate geographical areas, like the US-West 1 (North California) and Asia South (Mumbai). On the other hand, availability zones are the areas that are present inside the regions. These are generally isolated zones that can replicate themselves whenever required.

What tools and techniques can you use in AWS to identify if you are paying more than you should be, and how to correct it?

You can know that you are paying the correct amount for the resources that you are using by employing the following resources:

Check the Top Services Table: It is a dashboard in the cost management console that shows you the top five most used services. This will let you know how much money you are spending on the resources in question.

Cost Explorer: There are cost explorer services available that will help you to view and analyze your usage costs for the last 13 months. You can also get a cost forecast for the upcoming three months.

AWS Budgets: This allows you to plan a budget for the services. Also, it will enable you to check if the current plan meets your budget and the details of how you use the services.

Cost Allocation Tags: This helps in identifying the resource that has cost more in a particular month. It lets you organize your resources and cost allocation tags to keep track of your AWS costs.

What are the native AWS Security logging capabilities?

Most of the AWS services have their logging options. Also, some of them have account-level logging, like in AWS CloudTrail, AWS Config, and others. Let’s look at two services in specific:

AWS CloudTrail: This is a service that provides a history of the AWS API calls for every account. It lets you perform security analysis, resource change tracking, and compliance auditing of your AWS environment as well. The best part about this service is that it enables you to configure it to send notifications via AWS SNS when new logs are delivered.

AWS Config: This helps you understand the configuration changes that happen in your environment. This service provides an AWS inventory that includes configuration history, configuration change notifications, and relationships between AWS resources. It can also be configured to send information via AWS SNS when new logs are delivered.

What is a DDoS attack, and what services can minimize them?

DDoS is a cyber-attack in which the perpetrator accesses a website and creates multiple sessions so that other legitimate users cannot access the service. The native tools that can help you deny the DDoS attacks on your AWS services are:

- AWS Shield

- AWS WAF

- Amazon Route53

- Amazon CloudFront

- ELB

- VPC

AWS Shield is the service that protects against DDoS (Distributed Denial of Service) attacks on AWS applications. There are two types of AWS Shields: AWS Shield Standard and AWS Shield Advanced. AWS Shield Standard supports protecting applications from common and frequently occurring DDoS attacks. At the same time, AWS Shield advanced offers a higher level of protection for the applications running on Amazon EC2, ELB, Amazon CloudFront, AWS Global Accelerator, and Route 53.

You are trying to provide a service in a particular region, but you do not see the service in that region. Why is this happening, and how do you fix it?

Not all Amazon AWS services are available in all regions. When Amazon initially launches a new service, it doesn’t get immediately published in all the regions. They start small and then slowly expand to other regions. So, if you don’t see a specific service in your region, chances are the service hasn’t been published in your region yet. However, if you want to get a service that is not available, you can switch to the nearest region that provides the service.

Name some of the AWS services that are not region-specific

AWS services that are not region-specific are:

IAM

Route 53

Web Application Firewall

CloudFront

What is Serverless Computing?

AWS offers a serverless computing facility to run codes and manage data and applications without managing servers. Serverless computing eliminates infrastructure management tasks like capacity provisioning, patching, etc. It reduces operating costs significantly. As this technology scales in response to the demands for resources automatically, it ensures quick service to users.

We can deploy application on AWS using serverless technology, using below services:

frontend – S3, Route53 CloudFront

backend – API Gateway, Lambda, SNS, SQS, SES

database – Aurora MySQL

What is AWS Lambda?

It is a serverless and event-driven computing service. It allows running codes virtually for applications without any provisioning or managing servers. Most AWS services and SaaS applications can trigger AWS Lambda. This service can execute any code volume due to its scaling properties. Also, decoupled services can be communicated through the event-driven functions of AWS Lambda.

What is CloudWatch?

CloudWatch helps you to monitor AWS environments like EC2, RDS Instances, and CPU utilization. It also triggers alarms depending on various metrics.

What is Elastic Transcoder?

Elastic Transcoder is an AWS Service Tool that helps you in changing a video’s format and resolution to support various devices like tablets, smartphones, and laptops of different resolutions

What Are the Benefits of AWS’s Disaster Recovery?

Businesses use cloud computing in part to enable faster disaster recovery of critical IT systems without the cost of a second physical site. The AWS cloud supports many popular disaster recovery architectures ranging from small customer workload data center failures to environments that enable rapid failover at scale. With data centers all over the world, AWS provides a set of cloud-based disaster recovery services that enable rapid recovery of your IT infrastructure and data.

What are the factors to consider while migrating to Amazon Web Services?

Here are the factors to consider during AWS migration:

- Operational Costs – These include the cost of infrastructure, ability to match demand and supply, transparency, and others.

- Workforce Productivity

- Cost avoidance

- Operational resilience

- Business agility

How can you use AWS WAF in monitoring your AWS applications?

AWS WAF or AWS Web Application Firewall protects your web applications from web exploitations. It helps you control the traffic flow to your applications. With WAF, you can also create custom rules that block common attack patterns. It can be used for three cases: allow all requests, prevent all requests, and count all requests for a new policy.

What is a Stateful and a Stateless Firewall?

A Stateful Firewall is one that maintains the state of the rules defined. It requires you to define only inbound rules. Based on the inbound rules defined, it automatically allows the outbound traffic to flow.

On the other hand, a Stateless Firewall requires you to explicitly define rules for inbound as well as outbound traffic.

For example, if you allow inbound traffic from Port 80, a Stateful Firewall will allow outbound traffic to Port 80, but a Stateless Firewall will not do so.

What are the advantages of auto-scaling?

The following are the advantages of autoscaling

- Offers fault tolerance

- Better availability

- Better cost management

What is SQS?

Simple Queue Service is also known as SQS. It is distributed queuing service which acts as a mediator for two controllers.

Amazon Simple Queuing Service (SQS) is a fully managed message queuing service. Using this service, you can send, receive and store any quantity of messages between the applications. This service helps to reduce complexity and eliminate administrative overhead. In addition to that, it provides high protection to messages through the encryption method and delivers them to destinations without losing any message.

What are the two types of queues in SQS?

There are two types of queues known

Standard Queues: It is a default queue type. It provides an unlimited number of transactions per second and at least one message delivery option.

FIFO Queues: FIFO queues are designed to ensure that the order of messages is received and sent is strictly preserved as in the exact order that they sent.

DNS and Load Balancer service comes under which type of cloud service?

DNS and Load Balancer and DNS services come under IAAS-storage cloud service.

What is the role of AWS CloudTrail?

CloudTrail is a specially designed tool for logging and tracking API calls. It helps to audit all S3 bucket accesses.

Name the AWS service that exists only to redundantly cache data and images.

AWS Edge locations are services that redundantly cache data and images.

What is Amazon EMR?

EMR is a survived cluster stage that helps you to interpret the working of data structures before the intimation. Apache Hadoop and Apache Spark on Amazon Web Services help you to investigate a large amount of data. You can prepare data for the analytics goals and marketing intellect workloads using Apache Hive and using other relevant open-source designs.

Mention a few AWS-related commands

- Aws command to create an instance :

aws ec2 run-instances –image-id ami-xxxxxxxx –count 1 –instance-type t2.micro –key-name MyKeyPair –security-group-ids sg-903004f8 –subnet-id subnet-6e7f829e

- aws command to create tags :

$ aws ec2 create-tags –resources i-5203422c –tags Key=Name,Value=MyInstance

- Command to list any instances that have the tag Name=MyInstance.

$ aws ec2 describe-instances –filters “Name=tag:Name,Values=MyInstance”

- Terminating an instance :

aws ec2 terminate-instances –instance-ids i-5203422c

- To start an instance :

aws ec2 start-instances –instance-ids i-1234567890abcdef0

- To stop an instance:

aws ec2 stop-instances –instance-ids i-1234567890abcdef0

- AWS command to create a new bucket :

aws s3api create-bucket –bucket my-bucket –region us-east-1

- AWS command to create snapshot :

aws ec2 create-snapshot –volume-id vol-1234567890abcdef0 –description “This is my root volume snapshot”

What is AWS Elastic Beanstalk?

This AWS service helps deploy and manage applications in the cloud quickly and easily. Here, developers need to upload the codes; after that, Elastic Beanstalk will manage other requirements automatically. Simply put, Elastic Beanstalk manages right from capacity provisioning, auto-scaling, load balancing up to application health monitoring.

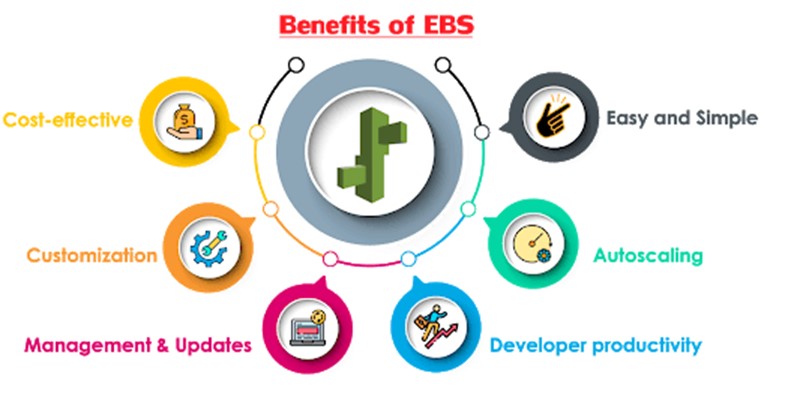

What are the benefits of AWS Elastic Beanstalk?

- In a way, it is faster and simpler to deploy applications

- The auto-scaling facility of Elastic Beanstalk supports to scale applications up and down based on the demands.

- This AWS service manages application platforms by updating with the latest patches and updates.

- When they use this service, developers could achieve enough freedom to choose the type of EC2 instance, processors, etc.

Following are the few benefits of the Elastic Beanstalk:

- Easy and simple: Elastic Beanstalk enables you to manage and deploy the application easily and quickly.

- Autoscaling: Beanstalk scales up or down automatically when your application traffic increases or decreases.

- Developer productivity: Developers can easily deploy the application without any knowledge, but they need to maintain the application securely and be user-friendly.

- Cost-effective: No charge for Beanstalk. Charges are applied for the AWS service resources which you are using for your application.

- Customization: Elastic Beanstalk allows users to select the configurations of AWS services that users want to use for application development.

- Management and updates: It updates the application automatically when it changes the platform. Platform updates and infrastructure management are taken care of by AWS professionals.

What is Amazon Kinesis?

This AWS service collects, processes, and analyses real-time streaming data and generates useful insights. Here, the real-time data will be video, audio, application logs, IoT telemetry data, and website clickstreams. And you can take the right actions at the right time based on these insights. Especially, data is processed and analyzed once received rather than waiting for the arrival of the whole data.

How do Amazon Kinesis data streams function?

Amazon Kinesis captures data from AWS services, microservices, Logs, and mobile apps and sensors, which can be of any quantity. Then, it easily streams the data to AWS Lambda, Amazon kinesis data analytics, and data firehose. And Amazon kinesis builds data streaming applications using the mentioned AWS services, open-source framework, and custom applications.

What is the similarity between Terraform and AWS CloudFormation?

Terraform and CloudFormation are both infrastructure-as-code (IaC) tools. CloudFormation is developed by AWS and only manages AWS resources. Terraform is developed by HashiCorp and can manage resources across a wide range of cloud vendors.

What is AWS CloudFormation?

AWS CloudFormation is a service that helps you model and set up your AWS resources so that you can spend less time managing those resources and more time focusing on your applications that run in AWS. You create a template that describes all the AWS resources that you want (like Amazon EC2 instances or Amazon RDS DB instances), and CloudFormation takes care of provisioning and configuring those resources for you. You don’t need to individually create and configure AWS resources and figure out what’s dependent on what; CloudFormation handles that. The following scenarios demonstrate how CloudFormation can help.

Simplify infrastructure management

For a scalable web application that also includes a backend database, you might use an Auto Scaling group, an Elastic Load Balancing load balancer, and an Amazon Relational Database Service database instance. You might use each individual service to provision these resources and after you create the resources, you would have to configure them to work together. All these tasks can add complexity and time before you even get your application up and running.

Instead, you can create a CloudFormation template or modify an existing one. A template describes all your resources and their properties. When you use that template to create a CloudFormation stack, CloudFormation provisions the Auto Scaling group, load balancer, and database for you. After the stack has been successfully created, your AWS resources are up and running. You can delete the stack just as easily, which deletes all the resources in the stack. By using CloudFormation, you easily manage a collection of resources as a single unit.

Quickly replicate your infrastructure

If your application requires additional availability, you might replicate it in multiple regions so that if one region becomes unavailable, your users can still use your application in other regions. The challenge in replicating your application is that it also requires you to replicate your resources. Not only do you need to record all the resources that your application requires, but you must also provision and configure those resources in each region.

Reuse your CloudFormation template to create your resources in a consistent and repeatable manner. To reuse your template, describe your resources once and then provision the same resources over and over in multiple regions.

What are the elements of an AWS CloudFormation template?

AWS CloudFormation templates are YAML or JSON formatted text files that are comprised of five essential elements, they are:

- Template parameters

- Output values

- Data tables

- Resources

- File format version

How does AWS config work with AWS CloudTrail?

AWS CloudTrail records user API activity on your account and allows you to access information about the activity. Using CloudTrail, you can get full details about API actions such as the identity of the caller, time of the call, request parameters, and response elements. On the other hand, AWS Config records point-in-time configuration details for your AWS resources as Configuration Items (CIs).

You can use a CI to ascertain what your AWS resource looks like at any given point in time. Whereas, by using CloudTrail, you can quickly answer who made an API call to modify the resource. You can also use Cloud Trail to detect if a security group was incorrectly configured.

Can AWS Config aggregate data across different AWS accounts?

Yes, you can set up AWS Config to deliver configuration updates from different accounts to one S3 bucket, once the appropriate IAM policies are applied to the S3 bucket.

What is CloudFront?

Amazon CloudFront is a web service that speeds up the distribution of your static and dynamic web content, such as .html, .css, .js, and image files, to your users. CloudFront delivers your content through a worldwide network of data centers called edge locations. When a user requests content that you’re serving with CloudFront, the request is routed to the edge location that provides the lowest latency (time delay), so that content is delivered with the best possible performance.

If the content is already in the edge location with the lowest latency, CloudFront delivers it immediately.

If the content is not in that edge location, CloudFront retrieves it from an origin that you’ve defined—such as an Amazon S3 bucket, a MediaPackage channel, or an HTTP server (for example, a web server) that you have identified as the source for the definitive version of your content.

As an example, suppose that you’re serving an image from a traditional web server, not from CloudFront. For example, you might serve an image, sunsetphoto.png, using the URL https://example.com/sunsetphoto.png.

Your users can easily navigate to this URL and see the image. But they probably don’t know that their request is routed from one network to another—through the complex collection of interconnected networks that comprise the internet—until the image is found.

CloudFront speeds up the distribution of your content by routing each user request through the AWS backbone network to the edge location that can best serve your content. Typically, this is a CloudFront edge server that provides the fastest delivery to the viewer. Using the AWS network dramatically reduces the number of networks that your users’ requests must pass through, which improves performance. Users get lower latency—the time it takes to load the first byte of the file—and higher data transfer rates.

You also get increased reliability and availability because copies of your files (also known as objects) are now held (or cached) in multiple edge locations around the world.

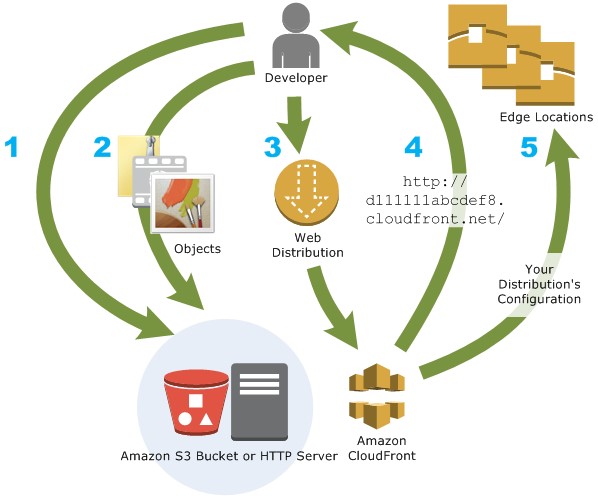

How you set up CloudFront to deliver content

You create a CloudFront distribution to tell CloudFront where you want content to be delivered from, and the details about how to track and manage content delivery. Then CloudFront uses computers—edge servers—that are close to your viewers to deliver that content quickly when someone wants to see it or use it.

How you configure CloudFront to deliver your content

- You specify origin servers, like an Amazon S3 bucket or your own HTTP server, from which CloudFront gets your files which will then be distributed from CloudFront edge locations all over the world.

An origin server stores the original, definitive version of your objects. If you’re serving content over HTTP, your origin server is either an Amazon S3 bucket or an HTTP server, such as a web server. Your HTTP server can run on an Amazon Elastic Compute Cloud (Amazon EC2) instance or on a server that you manage; these servers are also known as custom origins.

- You upload your files to your origin servers. Your files, also known as objects, typically include web pages, images, and media files, but can be anything that can be served over HTTP.

If you’re using an Amazon S3 bucket as an origin server, you can make the objects in your bucket publicly readable, so that anyone who knows the CloudFront URLs for your objects can access them. You also have the option of keeping objects private and controlling who accesses them. See Serving private content with signed URLs and signed cookies.

- You create a CloudFront distribution, which tells CloudFront which origin servers to get your files from when users request the files through your web site or application. At the same time, you specify details such as whether you want CloudFront to log all requests and whether you want the distribution to be enabled as soon as it’s created.

- CloudFront assigns a domain name to your new distribution that you can see in the CloudFront console, or that is returned in the response to a programmatic request, for example, an API request. If you like, you can add an alternate domain name to use instead.

- CloudFront sends your distribution’s configuration (but not your content) to all of its edge locations or points of presence (POPs)— collections of servers in geographically-dispersed data centers where CloudFront caches copies of your files.

As you develop your website or application, you use the domain name that CloudFront provides for your URLs. For example, if CloudFront returns d111111abcdef8.cloudfront.net as the domain name for your distribution, the URL for logo.jpg in your Amazon S3 bucket (or in the root directory on an HTTP server) is https://d111111abcdef8.cloudfront.net/logo.jpg.

Or you can set up CloudFront to use your own domain name with your distribution. In that case, the URL might be https://www.example.com/logo.jpg.

Optionally, you can configure your origin server to add headers to the files, to indicate how long you want the files to stay in the cache in CloudFront edge locations. By default, each file stays in an edge location for 24 hours before it expires. The minimum expiration time is 0 seconds; there isn’t a maximum expiration time.

What is Geo-Targeting in CloudFront?

Geo-Targeting enables the creation of customized content based on the geographic location of the user. This allows you to serve the content which is more relevant to a user. For example, using Geo-Targeting, you can show the news related to local body elections to a user sitting in India, which you may not want to show to a user sitting in the US. Similarly, the news related to Baseball Tournaments can be more relevant to a user sitting in the US, and not so relevant for a user sitting in India.

What is AWS Secrets Manager?

AWS Secrets Manager is a secrets management service that helps you protect access to your applications, services, and IT resources. This service enables you to easily rotate, manage, and retrieve database credentials, API keys, and other secrets throughout their lifecycle. Using Secrets Manager, you can secure and manage secrets used to access resources in the AWS Cloud, on third-party services, and on-premises.

Why should I use AWS Secrets Manager?

AWS Secrets Manager protects access to your applications, services, and IT resources, without the upfront investment and ongoing maintenance costs of operating your own infrastructure.

Secrets Manager is for IT administrators looking for a secure and scalable method to store and manage secrets. Security administrators responsible for meeting regulatory and compliance requirements can use Secrets Manager to monitor secrets and rotate secrets without risk of impacting applications. Developers who want to replace hardcoded secrets in their applications can retrieve secrets programmatically from Secrets Manager.

What secrets can I manage in AWS Secrets Manager?

You can manage secrets such as database credentials, on-premises resource credentials, SaaS application credentials, third-party API keys, and Secure Shell (SSH) keys. Secrets Manager enables you to store a JSON document which allows you to manage any text blurb that is 64 KB or smaller.

How do I get started with AWS Secrets Manager?

To get started with AWS Secrets Manager:

- Identify your secrets and locate where they are used in your applications.

- Sign in to the AWS Management Console using your AWS credentials and navigate to the Secrets Manager console.

- Use the Secrets Manager console to upload the secret you identified. Alternatively, you can use the AWS SDK or AWS CLI to upload a secret (once per secret). You can also write a script to upload multiple secrets.

- If your secret is not in use yet, follow the instructions on the console to configure automatic rotation. If applications are using your secret, complete steps (5) and (6) before configuring automatic rotation.

- If other users or applications need to retrieve the secret, write an IAM policy to grant permissions to the secret.

- Update your applications to retrieve secrets from Secrets Manager.

What is Amazon EKS ?

Amazon Elastic Kubernetes Service (Amazon EKS) is a managed Kubernetes service that makes it easy for you to run Kubernetes on AWS and on-premises. Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications. Amazon EKS is certified Kubernetes-conformant, so existing applications that run on upstream Kubernetes are compatible with Amazon EKS.

Amazon EKS automatically manages the availability and scalability of the Kubernetes control plane nodes responsible for scheduling containers, managing application availability, storing cluster data, and other key tasks.

Amazon EKS lets you run your Kubernetes applications on both Amazon Elastic Compute Cloud (Amazon EC2) and AWS Fargate. With Amazon EKS, you can take advantage of all the performance, scale, reliability, and availability of AWS infrastructure, as well as integrations with AWS networking and security services, such as application load balancers (ALBs) for load distribution, AWS Identity and Access Management (IAM) integration with role-based access control (RBAC), and AWS Virtual Private Cloud (VPC) support for pod networking.

What is Amazon ECR and ECS ?

Amazon Elastic Container Registry (Amazon ECR) is an Amazon Web Service (AWS) product that stores, manages and deploys Docker images, which are managed clusters of Amazon EC2 instances. Amazon ECR allows all AWS developers to save configurations and quickly move them into a production environment, thus reducing overall workloads.

Amazon ECR provides a command-line interface (CLI) and APIs to manage repositories and integrated services, such as Amazon Elastic Container Service (Amazon ECS), which installs and manages the infrastructure for these containers. The primary difference between Amazon ECR and ECS is that while ECR provides the repository that stores all code that has been written and packaged as a Docker image, the ECS takes these files and actively uses them in the deployment of applications.

A developer can use the Docker command line interface to push or pull container images to or from an AWS region. Amazon ECR can be used wherever a Docker container service is running, including on-premises environments.

Your organization has decided to have all their workload on the public cloud. But, due to certain security concerns, your organization decides to distribute some of the workload on private servers. You are asked to suggest a cloud architecture for your organization. What will be your suggestion?

A hybrid cloud. The hybrid cloud architecture is where an organization can use the public cloud for shared resources and the private cloud for its confidential workloads.

Your organization wants to send and receive compliance emails to its clients using its own email address and domain. What service would you suggest for achieving the same in an easy and cost-effective way?

Amazon Simple Email Service (Amazon SES), which is a cloud-based email sending service, can be used for this purpose.

What is Amazon Network Firewall?

This AWS service helps to protect VPCs (Virtual Private Cloud) against attacks. In this service, scaling is carried out automatically as per the traffic flow in the network. You can define your firewall rules using Network Firewall’s flexible rules engine; therefore, you can get reasonable control over the network traffic. Network Firewall can work alongside the AWS firewall manager to build and apply security policies on all VPCs and accounts.

What is the name of Amazon’s Content Delivery Network ?

Amazon CloudFront

Which of the following services can be used if you want to capture client connection information from your load balancer at a particular time interval?

– Enabling access logs on your load balancer

- Enabling CloudTrail for your load balancer

- Enabling CloudWatch metrics for your load balancer

Enabling CloudTrail for your load balancer. AWS CloudTrail is an inexpensive log monitoring solution provided by Amazon. It can provide logging information for load balancers or any other AWS resources. The provided information can further be used for analysis.

Which service provided by AWS can you use to transfer objects from your data center, when you are using Amazon CloudFront?

Amazon Direct Connect. It is an AWS networking service that acts as an alternative to using the Internet to connect customers in on-premise sites with AWS.

If in CloudFront the content is not present at an edge location, what will happen when a request is made for that content?

CloudFront will deliver the content directly from the origin server. It will also store the content in the cache of the edge location where the content was missing.

What are the native AWS security logging capabilities?

The native AWS security logging capabilities include AWS CloudTrail, AWS Config, AWS detailed billing reports, Amazon S3 access logs, Elastic load balancing Access logs, Amazon CloudFront access logs, Amazon VPC Flow logs, etc

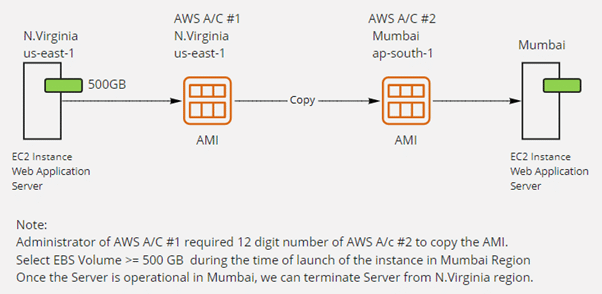

A Company has a running Web Application Server in the N. Virginia region and the server has a large size EBS volume of approximately 500 GB, and to see the demand of business, the company needs to migrate the server from the current region to another AWS account’s Mumbai location. Which is the best way to migrate the server from the current location to the Mumbai region? And what information AWS administrator does require about AWS A/C?

Create an AMI of the server running in the North Virginia region. Once the AMI is created, The administrator would need the 12 digit account number of the #2 AWS account. This is required for copying the AMI which we have created.

Once the AMI is successfully copied into the Mumbai region, you can launch the instance using copied AMI in the Mumbai region. Once the instance is running and if it’s completely operational, the server in the North Virginia region could be terminated. This is the best way to migrate a server to a different account without any hassle.

Unable to ping Instance We launched a Windows 2019 IIS server in the Ohio region and deployed a dynamic website in this server, in addition, the webserver also connected with a backend MS-SQL server to store and access data related to the application. Our users were able to access the website over the Internet. The next day our client informed us that they were able to access the website but weren’t able to ping the server from the Internet. To ensure ICMP rule in Security Group, we checked, and the Security Group had allowed rule from 0.0.0.0/0. Would you try to help troubleshoot the issue?

If the client is able to access the website from his/her end, it means the connection is perfect and no issue with connectivity and the Security Group configuration also seems correct.

We can check the internal firewall of the Windows 2019 IIS server. If it is blocking ICMP traffic, we should enable it.

A start-up company has a web application based in the us-east-1 Region with multiple Amazon EC2 instances running behind an Application Load Balancer across multiple Availability Zones. As the company’s user base grows in the us-west-1 region, the company needs a solution with low latency and improved high availability. What should a solutions architect do to achieve it.?

You need to notice here, currently, the web application is in us-ease-1, and the user base grows in the us-east-1 region. The very first step, provision multiple EC2 instances (web application servers) and configure an Application Load Balancer in us-west-1. Now, create Global Accelerator in AWS Global Accelerator which uses an endpoint group that includes the load balancer endpoints in both Regions.

A company currently operates a web application backed by an Amazon RDS MySQL database. It has automated backups that are run daily and are not encrypted. A security audit requires future backups to be encrypted and unencrypted backups to be destroyed. The company will make at least one encrypted backup before destroying the old backups. What should be done to enable encryption for future backups?

- Create a snapshot of the database.

- Copy it to an encrypted snapshot.

- Restore the database from the encrypted snapshot.

A company is going to launch one branch in the UK and need to continue with its existing main branch in the USA. The company has almost 15 GB of data which is stored in an S3 Bucket in the Ohio region and data is stored with the default storage class. The Company also wants to provide its updated & stored data in the London S3 bucket using one zone accessibility storage class to save storage costs. In addition, the company also wants that the data must be updated automatically in S3’s London bucket; if any data is modified or written in the S3 bucket in Ohio.

Configure Cross Region Replication Rule in Ohio region bucket and select the destination bucket in the London region to replicate the data and store it in the destination using one zone IA storage class to save cost.

The data on the root volumes of store-backed and EBS-backed instances get deleted by default when they are terminated. If you want to prevent that from happening, which instance would you use? And ensure if the EC2 instance is restarted, the data or configuration in the EC2 instance should not be lost.

EBS-backed instances or instances with EBS Volume. EBS-backed instances use EBS volume as their root volume. These volumes contain Operating Systems, Applications, and Data. We can create Snapshots from these volumes or AMI from Snapshots.

The main advantage of EBS-backed volume is that the data can be configured to be stored for later retrieval even if the virtual machine or the instances are shut down.

You have an application running on an EC2 instance. You need to reduce the load on your instance as soon as the CPU utilization reaches 80 percent. How will you accomplish the job?

It can be done by creating an autoscaling group to deploy more instances when the CPU utilization of the EC2 instance exceeds 80 percent and distributing traffic among instances by creating an application load balancer and registering EC2 instances as target instances.

In AWS, three different storage services are available, such as EFS, S3, and EBS. When should I use Amazon EFS vs. Amazon S3 vs. Amazon Elastic Block Store (EBS)?

Amazon Web Services (AWS) offers cloud storage services to support a wide range of storage workloads.

Amazon EFS is a file storage service for use with Amazon compute (EC2, containers, serverless) and on-premises servers. Amazon EFS provides a file system interface, file system access semantics (such as strong consistency and file locking), and concurrently accessible storage for up to thousands of Amazon EC2 instances.

Amazon EBS is a block-level storage service for use with Amazon EC2. Amazon EBS can deliver performance for workloads that require the lowest latency access to data from a single EC2 instance.

Amazon S3 is an object storage service. Amazon S3 makes data available through an Internet API that can be accessed anywhere.

A company’s web application is using multiple Linux Amazon EC2 instances and storing data on Amazon EBS volumes. The company is looking for a solution to increase the resiliency of the application in case of a failure and to provide storage that complies with atomicity, consistency, isolation, and durability (ACID). What should a solutions architect do to meet these requirements?

Create an Application Load Balancer with AWS Auto Scaling groups across multiple Availability Zones. Store data on Amazon EFS and mount a target on each instance.

An application running on AWS uses an Amazon Aurora Multi-AZ deployment for its database. When evaluating performance metrics, a solutions architect discovered that the database reads are causing high I/O and adding latency to the write requests against the database. What should the solutions architect do to separate the read requests from the write requests?

Create a read replica and modify the application to use the appropriate endpoint.

A client reports that they wanted to see an audit log of any changes made to AWS resources in their account. What can the client do to achieve this?

Enable AWS CloudTrail logs to be delivered to an Amazon S3 bucket.

Usually, you have noticed that one EBS volume can be connected with one EC2 instance, our company wants to run a business-critical application on multiple instances in a single region and need to store all instances output in single storage within the VPC. Instead of using EFS, our company is recommending the use of multi-attach volume with instances. As an architect, you need to suggest them what instance type and EBS volumes they should use.

The instance type should be EC2 Nitro-based instances and Provisioned IOPs io1 multi-attach EBS volumes.

A company is using a VPC peering connection option to connect its multiple VPCs in a single region to allow for cross VPC communication. A recent increase in account creations and VPCs has made it difficult to maintain the VPC peering strategy, and the company expects to grow to hundreds of VPCs. There are also new requests to create site-to-site VPNs with some of the VPCs. A solutions architect has been tasked with creating a centrally networking setup for multiple accounts and VPNs. Which networking solution would you recommend to resolve it?

Configure a transit gateway with AWS Transit Gateway and connect all VPCs and VPNs.

An organization has multiple facilities in various continents such as North America, Europe, and the Asia Pacific. The organization is designing a new distributed application to manage and optimize its global supply chain and its manufacturing process. It needs to design the process in such a way that the booked order in one continent should be able to support data failover with a short Recovery Time Objective (RTO). The uptime of the application should not impact manufacturing, what kind of solution would you recommend as a solution architect?

Use Amazon DynamoDB global tables feature for the database

Click here to find interview Questions and Answers on other topics related to DevOps