What is a Hypervisor?

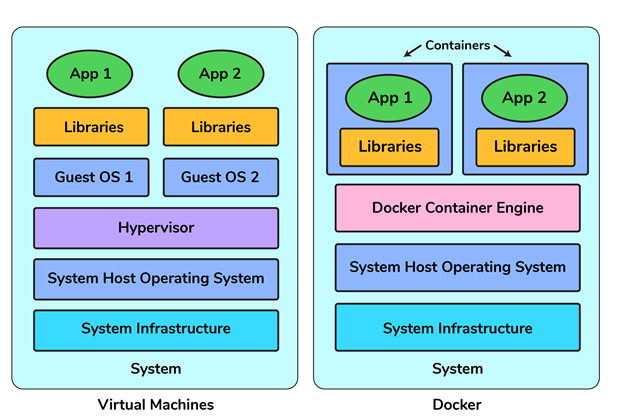

A hypervisor is software that makes virtualization possible. It is also called a Virtual Machine Monitor (VMM). It divides the host system’s resources and allocates them to each virtual environment, so you can run multiple operating systems on a single host. There are two types of hypervisors:

- Type 1: Also called a native or bare-metal hypervisor. It runs directly on the host’s hardware, has direct access to it, and therefore does not require a base operating system.

- Type 2: Also called a hosted hypervisor. It runs on top of the underlying host operating system.

What is virtualization?

Virtualization is the process of creating a software-based, virtual version of something (compute, storage, servers, applications, etc.). These virtual versions or environments are created from a single physical hardware system. Virtualization lets you split one system into many sections that act like separate, distinct systems. Software called a hypervisor makes this kind of splitting possible. The virtual environment created by the hypervisor is called a virtual machine.

What is containerization?

In the software development process, code developed on one machine might not work on another machine because of differences in dependencies. Containerization solves this problem. The application being developed and deployed is bundled together with all its configuration files and dependencies, and this bundle is called a container. When you wish to run the application on another system, you deploy the container, which provides a consistent environment because all the dependencies and libraries travel with it. Docker is the best-known containerization platform, and Kubernetes is the most widely used tool for orchestrating containers.

Difference between virtualization and containerization

Containers provide an isolated environment for running the application. The entire user space is explicitly dedicated to the application. Changes made inside the container are not reflected on the host or on other containers running on the same host, unless you deliberately share something such as a volume. Containers are an abstraction of the application layer. Each container is a different application.

In virtualization, by contrast, hypervisors provide an entire virtual machine to the guest (including the kernel). Virtual machines are an abstraction of the hardware layer. Each VM behaves like a separate physical machine.

What is Docker?

Docker is a containerization platform that packages your application and all its dependencies together in the form of containers, so that your application works seamlessly in any environment, be it development, test, or production. Docker containers wrap a piece of software in a complete filesystem that contains everything needed to run it: code, runtime, system tools, system libraries, and so on. Basically, anything that can be installed on a server can be wrapped. This helps the software run the same way regardless of its environment.

What is a Docker Container?

Docker containers include the application and all of its dependencies. They share the kernel with other containers, running as isolated processes in user space on the host operating system. Docker containers are not tied to any specific infrastructure: they run on any computer, on any infrastructure, and in any cloud where Docker is available. Docker containers are basically runtime instances of Docker images.

What are Docker’s most notable features?

Docker’s most essential features include:

- Application agility

- Developer productivity

- Easy modeling

- Operational efficiencies

- Placement and affinity

- Version control

Why should anyone use Docker? What does it offer?

Docker gives users many incentives for adoption, such as:

- An efficient and easy initial set up experience

- The means to describe an application lifecycle in detail

- Simple configuration and smooth interaction with Docker Compose

- Complete and well-detailed documentation

- Ability to run on a PC or enterprise IT system with equal ease

What about the opposite? Does Docker have any downsides?

Docker isn’t perfect. It comes with its share of drawbacks, including:

- Lacks a storage option

- Monitoring options are less than ideal

- You can’t automatically reschedule inactive nodes

- Automatic horizontal scaling set up is complicated

Name and explain the various Docker components.

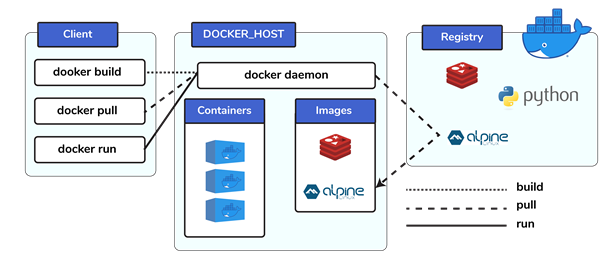

The three main Docker components are:

- Docker Client. Issues Docker build, pull, and run operations to the Docker Host. Each docker command uses the Docker API to send its request to the daemon.

- Docker Host. Contains the Docker daemon, containers, and associated images. The Docker daemon establishes a connection with the Registry. The stored images carry the metadata and filesystem needed to run containerized applications.

- Registry. This is where Docker images are stored. A registry can be public or private. Docker Hub is the default public registry, available for use by anyone.

What are Docker Images?

A Docker image is the source of a Docker container. In other words, Docker images are used to create containers: when a user runs a Docker image, an instance of a container is created. Docker images can be deployed to any Docker environment.

Explain Registries

There are two types of registries:

- Public Registry

- Private Registry

Docker’s public registry is called Docker Hub. It also allows you to store images privately. Docker Hub hosts millions of images.

What is Docker Hub?

Docker images create Docker containers, and there has to be a registry where these images live. By default, that registry is Docker Hub. Users can pick up images from Docker Hub and use them to create customized images and containers. Docker Hub is the world’s largest public repository of container images.

Explain Docker Architecture?

Docker Architecture consists of a Docker Engine which is a client-server application with three major components:

- A server which is a type of long-running program called a daemon process (the dockerd command).

- A REST API which specifies interfaces that programs can use to talk to the daemon and instruct it what to do.

- A command line interface (CLI) client (the docker command).

The CLI uses the Docker REST API to control or interact with the Docker daemon through scripting or direct CLI commands. Many other Docker applications use the underlying API and CLI.

What is a Dockerfile?

Start with a short explanation of what a Dockerfile is, then support your answer with examples and commands.

Docker can build images automatically by reading the instructions from a file called Dockerfile. A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image. Using docker build, users can create an automated build that executes several command-line instructions in succession.
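
As a minimal sketch of that workflow, the snippet below writes a small Dockerfile into a scratch directory and builds it. The image tag hello-demo, the script hello.sh, and the alpine:3.19 base image are assumptions chosen for illustration. The Docker calls are skipped when no daemon is reachable.

```shell
# Work in a scratch directory so nothing in the current project is touched.
workdir=$(mktemp -d)
cd "$workdir"

# A tiny application file to copy into the image (hypothetical content).
echo 'echo "hello from the container"' > hello.sh

# The Dockerfile: each instruction is one step of the automated build.
cat > Dockerfile <<'EOF'
FROM alpine:3.19
COPY hello.sh /hello.sh
RUN chmod +x /hello.sh
CMD ["/bin/sh", "/hello.sh"]
EOF

# Build and run only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  docker build -t hello-demo .
  docker run --rm hello-demo
fi
```

The trailing dot in the build command is the build context, which here is the scratch directory that holds both the Dockerfile and hello.sh.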

Tell us something about Docker Compose.

Docker Compose is a tool that reads a YAML file containing details about the services, networks, and volumes that make up a Docker application. You can use Docker Compose to create separate containers, host them, and get them to communicate with each other. Containers in the same Compose project can reach each other by service name, and each one can expose ports for communication.
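
A hedged sketch of such a file follows. The service names web and cache, the images, and the port mapping are illustrative assumptions, not part of any particular project. The snippet writes the file and, if the Compose plugin is installed, asks it to validate the configuration.

```shell
workdir=$(mktemp -d)
cd "$workdir"

# Two services: a web server published on host port 8080 and a cache it talks to.
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
    depends_on:
      - cache
  cache:
    image: redis:alpine
EOF

# Validate the file only when the Compose plugin is available.
if docker compose version >/dev/null 2>&1; then
  docker compose config --quiet && echo "compose file is valid"
fi
```

With a running daemon, docker compose up -d in the same directory would start both containers on a shared network.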

What is Docker Swarm?

You are expected to have worked with Docker Swarm as it’s an important concept of Docker.

Docker Swarm is native clustering for Docker. It turns a pool of Docker hosts into a single, virtual Docker host. Because Docker Swarm serves the standard Docker API, any tool that already communicates with a Docker daemon can use Swarm to transparently scale to multiple hosts.

What is a Docker Namespace?

A namespace is a Linux kernel feature and an important concept behind containers.

Namespaces add a layer of isolation to containers.

Docker uses several namespaces so that containers stay portable and do not affect the underlying host system. The namespace types Docker supports include PID, Mount, IPC, User, and Network.

What is the lifecycle of a Docker Container?

Docker containers have the following lifecycle:

- Create a container

- Run the container

- Pause the container (optional)

- Un-pause the container (optional)

- Start the container

- Stop the container

- Restart the container

- Kill the container

- Destroy the container

What is Docker Machine?

Docker machine is a tool that lets you install Docker Engine on virtual hosts. These hosts can now be managed using the docker-machine commands. Docker machine also lets you provision Docker Swarm Clusters.

How to check for Docker Client and Docker Server versions?

The following command gives you information about Docker Client and Server versions:

$ docker version

How do you get the number of containers running, paused and stopped?

You can use the following command to get detailed information about the docker installed on your system.

$ docker info

You can get the number of containers running, paused, stopped, the number of images and a lot more.

If you vaguely remember the command and you’d like to confirm it, how will you get help on that particular command?

The following command is very useful as it gives you help on how to use a command, the syntax, etc.

$ docker --help

The above command lists all Docker commands. If you need help with one specific command, you can use the following syntax:

$ docker <command> --help

How to log in to the Docker repository?

You can use the following command to log in to hub.docker.com:

$ docker login

You’ll be prompted for your username and password. Enter them and you are logged in.

If you wish to use a base image and make modifications or personalize it, how do you do that?

You pull the image from Docker Hub onto your local system and then build on top of it. Pulling an image takes one simple command:

$ docker pull <image_name>

How do you create a docker container from an image?

Pull an image from docker repository with the above command and run it to create a container. Use the following command:

$ docker run -it -d <image_name>

Most probably the next question would be, what does the ‘-d’ flag mean in the command?

-d means the container needs to start in the detached mode.

How do you list all the running containers?

The following command lists down all the running containers:

$ docker ps

Suppose you have 3 containers running and out of these, you wish to access one of them. How do you access a running container?

The following command lets us access a running container:

$ docker exec -it <container id> bash

The exec command lets you get inside a container and work with it.

How to start, stop and kill a container?

The following command is used to start a docker container:

$ docker start <container_id>

and the following for stopping a running container:

$ docker stop <container_id>

kill a container with the following command:

$ docker kill <container_id>

Can you use a container, edit it, and update it? Also, how do you turn it into a new image and store it on the local system?

Of course, you can use a container, edit it and update it. This sounds complicated but it’s actually just one command.

$ docker commit <container id> <username/imagename>

Once you’ve worked with an image, how do you push it to docker hub?

$ docker push <username/image name>

How to delete a stopped container?

Use the following command to delete a stopped container:

$ docker rm <container id>

How to delete an image from the local storage system?

The following command lets you delete an image from the local system:

$ docker rmi <image-id>

How to build a Dockerfile?

Once you’ve written a Dockerfile, you need to build it to create an image with those specifications. Use the following command, where the path is the build context (the directory that contains the Dockerfile):

$ docker build <path to build context>

The next question would be: when do you use “.” and when do you pass the entire path?

Use “.” when the Dockerfile exists in the current directory. If it lives somewhere else, pass the path to that directory, or point to the file explicitly with the -f option.

Do you know why docker system prune is used? What does it do?

$ docker system prune

The above command removes all stopped containers, all networks that are not in use, all dangling images, and the unused build cache. It’s one of the most useful Docker commands.

Will you lose your data when a Docker container exits?

No, you won’t lose any data when a Docker container exits. Any data that your application writes to the container is preserved on disk until you explicitly delete the container. The file system for the container persists even after the container halts.

Where all do you think Docker is being used?

When asked such a question, respond by talking about applications of Docker. Docker is being used in the following areas:

- Simplifying configuration: Docker lets you put your environment and configuration into code and deploy it.

- Code Pipeline Management: There are different systems used for development and production. As the code travels from development to testing to production, it goes through a difference in the environment. Docker helps in maintaining the code pipeline consistency.

- Developer Productivity: Using Docker for development gives us two things: the development environment is closer to production, and it is built faster.

- Application Isolation: As containers are applications wrapped together with all dependencies, your apps are isolated. They can work by themselves on any hardware that supports Docker.

- Debugging Capabilities: Docker supports various debugging tools that are not specific to containers but work well with containers.

- Multi-tenancy: Docker lets you have multi-tenant applications avoiding redundancy in your codes and deployments.

- Rapid Deployment: Docker eliminates the need to boot an entire OS from scratch, reducing the deployment time.

How is Docker different from other containerization methods?

Docker containers are very easy to deploy on any cloud platform. Docker can get more applications running on the same hardware than other technologies, makes it easy for developers to quickly create ready-to-run containerized applications, and makes managing and deploying applications much easier. You can even share containers together with your applications.

If you have some more points to add you can do that but make sure the above explanation is there in your answer.

Can I use JSON instead of YAML for my compose file in Docker?

You can use JSON instead of YAML for your Compose file. To do so, specify the JSON filename with the -f option, for example:

$ docker-compose -f docker-compose.json up

How many containers can you run in Docker, and what factors influence this limit?

There is no clearly defined limit to the number of containers that can be run within Docker. The practical limit comes from hardware restrictions: the size of the app and the CPU resources available are two important factors. If your application is not very big and you have abundant CPU resources, you can run a huge number of containers.

There can be as many containers as you wish per host, and Docker does not put any restrictions on it. But every container needs storage space, CPU, and memory, which the hardware has to support, and you also need to consider the application size. Containers are considered lightweight but are very dependent on the host OS.

How have you used Docker in your previous position?

Explain how you have used Docker to help rapid deployment. Explain how you have scripted Docker and used it with other tools like Puppet, Chef or Jenkins. If you have no past practical experience in Docker and instead have experience with other tools in a similar space, be honest and explain the same. In this case, it makes sense if you can compare other tools to Docker in terms of functionality.

How far do Docker containers scale? Are there any requirements for the same?

Large web deployments like Google and Twitter, and platform providers such as Heroku and dotCloud, all run on container technology. Containers can be scaled to hundreds of thousands or even millions running in parallel. As for requirements, containers need memory and the host OS to be available at all times, along with a way to use that memory efficiently at scale.

What platforms does docker run on?

This is a very straightforward question but can get tricky.

Docker runs on various Linux distributions:

- Ubuntu 12.04, 13.04 et al

- Fedora 19/20+

- RHEL 6.5+

- CentOS 6+

- Gentoo

- ArchLinux

- openSUSE 12.3+

- CRUX 3.0+

It can also be used in production with Cloud platforms with the following services:

- Amazon EC2

- Amazon ECS

- Google Compute Engine

- Microsoft Azure

- Rackspace

Is there a way to identify the status of a Docker container?

There are six possible states a container can be in at any given point: Created, Running, Paused, Restarting, Exited, and Dead.

Use the following command to check for docker state at any given point:

$ docker ps

The above command lists down only running containers by default. To look for all containers, use the following command:

$ docker ps -a

Can you remove a paused container from Docker?

Not with a plain docker rm. A paused container has to be unpaused and stopped before it can be removed, unless you force removal with docker rm -f.

Can a container restart by itself?

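Not by default. The --restart flag defaults to no, so Docker does not restart a container after it exits. To have a container restart automatically, start it with a restart policy such as on-failure, always, or unless-stopped.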

Differentiate between COPY and ADD commands that are used in a Dockerfile?

Both instructions have similar functionality, but COPY is preferred because its behavior is more transparent than that of ADD.

COPY provides just the basic support of copying local files into the container whereas ADD provides additional features like remote URL and tar extraction support.
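
To make the tar-extraction difference concrete, here is a small sketch. The archive name site.tar.gz, the target directories, and the image tag copy-vs-add are hypothetical. The snippet prepares a local archive and a Dockerfile that brings it in with both instructions. The build is skipped when no daemon is reachable.

```shell
workdir=$(mktemp -d)
cd "$workdir"

# Create a small local archive to bring into the image.
mkdir site
echo '<h1>hello</h1>' > site/index.html
tar -czf site.tar.gz site

cat > Dockerfile <<'EOF'
FROM alpine:3.19
# COPY places the archive in the image unchanged.
COPY site.tar.gz /copied/
# ADD unpacks a local tar archive into the target directory.
ADD site.tar.gz /added/
EOF

if docker info >/dev/null 2>&1; then
  docker build -t copy-vs-add .
  docker run --rm copy-vs-add ls /copied /added
fi
```

If the build runs, /copied should hold the archive itself while /added should hold the unpacked site directory. That implicit behavior is why COPY is the more predictable choice when you only want to copy files.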

Is it better to directly remove the container using the rm command or stop the container followed by remove container?

It’s always better to stop the container first and then remove it.

docker stop sends SIGTERM to the container’s main process and, if the process has not exited after a grace period (10 seconds by default), follows up with SIGKILL. This gives the application time to finish its cleanup tasks, which is considered good practice and avoids unwanted errors. Once the container has stopped, a plain docker rm is enough; the -f flag is only needed to force removal of a running container.

$ docker stop <container_id>

$ docker rm <container_id>
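
The value of a graceful stop can be shown without Docker at all. docker stop first sends SIGTERM and only later SIGKILL, so a process that traps SIGTERM gets a chance to clean up. The sketch below uses a hypothetical app.sh as a stand-in for a containerized process and sends it the same signal by hand.

```shell
workdir=$(mktemp -d)

# Stand-in for a containerized process that cleans up when asked to stop.
cat > "$workdir/app.sh" <<'EOF'
#!/bin/sh
trap 'echo "cleaned up" > "$(dirname "$0")/shutdown.log"; exit 0' TERM
while true; do sleep 1; done
EOF

sh "$workdir/app.sh" &
pid=$!
sleep 1              # give the process time to install its handler
kill -TERM "$pid"    # the signal that docker stop sends first
wait "$pid"
cat "$workdir/shutdown.log"
```

SIGKILL, which docker kill sends by default and docker rm -f uses on a running container, cannot be trapped, so the cleanup step would never run.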

Can you explain the purposes of the up, run, and start commands of Docker Compose?

- up: Starts or restarts all the services, networks, and volumes defined in the docker-compose.yml file. In the “attached” mode, the logs from all containers are streamed to the terminal. In the “detached” mode, the command exits once the containers are started and shows no logs.

- run: Runs a one-off or ad-hoc task. The service name has to be provided, and Compose starts only that service plus any services it depends on. This is helpful for testing containers and for tasks such as adding or removing data in container volumes.

- start: Restarts only containers that were already created and then stopped. It cannot create new containers on its own.

What are the basic requirements for the docker to run on any system?

Docker can run on both Windows and Linux platforms.

- For the Windows platform, Docker needs at least Windows 10 64-bit with 2 GB of RAM. For lower versions, Docker can be installed with the help of Docker Toolbox. Docker can be downloaded from https://docs.docker.com/docker-for-windows/.

- For Linux platforms, Docker can run on various Linux flavors such as Ubuntu >=12.04, Fedora >=19, RHEL >=6.5, CentOS >=6 etc.

List the most commonly used instructions in Dockerfile?

- FROM: This is used to set the base image for upcoming instructions. A valid Dockerfile must begin with a FROM instruction (only ARG instructions, comments, and parser directives may come before it).

- LABEL: This is used for the image organization based on projects, modules, or licensing. It also helps in automation as we specify a key-value pair while defining a label that can be later accessed and handled programmatically.

- RUN: This command is used to execute instructions following it on the top of the current image in a new layer. Note that with each RUN command execution, we add layers on top of the image and then use that in subsequent steps.

- CMD: This command is used to provide default values of an executing container. In cases of multiple CMD commands the last instruction would be considered.

Explain CMD and ENTRYPOINT.

1. Overview

In a Dockerfile, we often encounter instructions like run, cmd, or entrypoint. At first glance, they are all used for specifying and running commands. But what’s the difference between them? And how do they interact with one another?

In this tutorial, we’ll answer these questions. We’ll present what each of these instructions does and how they work. We’ll also look at what role they play in building an image and running a Docker container.

2. Setup

To start, let’s create a script, log-event.sh. It simply adds one line to a file and then prints it:

#!/bin/sh

echo `date` $@ >> log.txt;

cat log.txt;

And now, let’s create a simple Dockerfile:

FROM alpine

ADD log-event.sh /

It’ll make use of our script by appending lines to log.txt in different scenarios.
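
Before involving Docker, it can help to see the script’s behavior on its own. The following sketch recreates log-event.sh in a scratch directory (with the arguments quoted, a small assumption beyond the original) and calls it twice, mimicking a build-time and a run-time invocation.

```shell
workdir=$(mktemp -d)
cd "$workdir"

# Same logic as log-event.sh above: append a dated line, then print the log.
cat > log-event.sh <<'EOF'
#!/bin/sh
echo "$(date)" "$@" >> log.txt
cat log.txt
EOF

sh log-event.sh image created        # prints one line
sh log-event.sh container started    # prints both lines
```

Each call appends one line made of the current date followed by its arguments, which is what the Docker examples in the following sections rely on.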

3. The run Command

The run instruction executes when we build the image. That means the command passed to run executes on top of the current image in a new layer. Then the result is committed to the image. Let’s see how this looks in action.

Firstly, we’ll add a run instruction to our Dockerfile:

FROM alpine

ADD log-event.sh /

RUN ["/log-event.sh", "image created"]

Secondly, let’s build our image with:

docker build -t myimage .

Now we expect to have a Docker image containing a log.txt file with one image created line inside. Let’s check this by running a container based on the image:

docker run myimage cat log.txt

When listing the contents of the file, we’ll see an output like this:

Fri Sep 18 20:31:12 UTC 2020 image created

If we run the container several times, we’ll see that the date in our log file doesn’t change. This makes sense because the run step executes at image build time, not at the container runtime.

Let’s now build our image again. We notice the creation time in our log didn’t change. This happens because Docker caches the result for the run instruction if the Dockerfile didn’t change. If we want to invalidate the cache, we need to pass the --no-cache option to the build command.

4. The cmd Command

With the cmd instruction, we can specify a default command that executes when the container is starting. Let’s add a cmd entry to our Dockerfile and see how it works:

…

RUN ["/log-event.sh", "image created"]

CMD ["/log-event.sh", "container started"]

After building the image, let’s now run it and check the output:

$ docker run myimage

Fri Sep 18 18:27:49 UTC 2020 image created

Fri Sep 18 18:34:06 UTC 2020 container started

If we run this multiple times, we’ll see that the image created entry stays the same. But the container started entry updates with every run. This shows how cmd indeed executes every time the container starts.

Notice we’ve used a slightly different docker run command to start our container this time. Let’s see what happens if we run the same command as before:

$ docker run myimage cat log.txt

Fri Sep 18 18:27:49 UTC 2020 image created

This time the cmd specified in the Dockerfile is ignored. That’s because we have specified arguments to the docker run command.

Let’s move on now and see what happens if we have more than one cmd entry in the Dockerfile. Let’s add a new entry that will display another message:

…

RUN ["/log-event.sh", "image created"]

CMD ["/log-event.sh", "container started"]

CMD ["/log-event.sh", "container running"]

After building the image and running the container again, we’ll find the following output:

$ docker run myimage

Fri Sep 18 18:49:44 UTC 2020 image created

Fri Sep 18 18:49:58 UTC 2020 container running

As we can see, the container started entry is not present, only the container running is. That’s because only the last cmd is invoked if more than one is specified.

5. The entrypoint Command

As we saw above, cmd is ignored if passing any arguments when starting the container. What if we want more flexibility? Let’s say we want to customize the appended text and pass it as an argument to the docker run command. For this purpose, let’s use entrypoint. We’ll specify the default command to run when the container starts. Moreover, we’re now able to provide extra arguments.

Let’s replace the cmd entry in our Dockerfile with entrypoint:

…

RUN ["/log-event.sh", "image created"]

ENTRYPOINT ["/log-event.sh"]

Now let’s run the container by providing a custom text entry:

$ docker run myimage container running now

Fri Sep 18 20:57:20 UTC 2020 image created

Fri Sep 18 20:59:51 UTC 2020 container running now

We can see how entrypoint behaves similarly to cmd. And in addition, it allows us to customize the command executed at startup.

Like with cmd, in case of multiple entrypoint entries, only the last one is considered.

6. Interactions Between cmd and entrypoint

We have used both cmd and entrypoint to define the command executed when running the container. Let’s now move on and see how to use cmd and entrypoint in combination.

One such use-case is to define default arguments for entrypoint. Let’s add a cmd entry after entrypoint in our Dockerfile:

…

RUN ["/log-event.sh", "image created"]

ENTRYPOINT ["/log-event.sh"]

CMD ["container started"]

Now, let’s run our container without providing any arguments, and with the defaults specified in cmd:

$ docker run myimage

Fri Sep 18 21:26:12 UTC 2020 image created

Fri Sep 18 21:26:18 UTC 2020 container started

We can also override them if we choose so:

$ docker run myimage custom event

Fri Sep 18 21:26:12 UTC 2020 image created

Fri Sep 18 21:27:25 UTC 2020 custom event

Something to note is the different behavior of entrypoint when used in its shell form. Let’s update the entrypoint in our Dockerfile:

…

RUN ["/log-event.sh", "image created"]

ENTRYPOINT /log-event.sh

CMD ["container started"]

In this situation, when running the container, we’ll see how Docker ignores any arguments passed to either docker run or cmd.
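
The interaction rules can be summarized in a few lines of shell. This is only a simplified model of the behavior described in this section, not Docker’s actual implementation; the function name effective_command and its parameters are made up for illustration.

```shell
# effective_command FORM ENTRYPOINT CMD RUN_ARGS
#   FORM is "exec", "shell", or "none" (no ENTRYPOINT in the Dockerfile).
effective_command() {
  form=$1 entrypoint=$2 cmd=$3 run_args=$4
  # Arguments given to docker run replace CMD entirely.
  args=${run_args:-$cmd}
  case $form in
    none)  echo "$args" ;;                          # CMD or its override is the command
    exec)  echo "$entrypoint${args:+ $args}" ;;     # arguments are appended
    shell) echo "/bin/sh -c $entrypoint" ;;         # CMD and run arguments are ignored
  esac
}

effective_command none  ""            "/log-event.sh container started" ""
# prints: /log-event.sh container started
effective_command none  ""            "/log-event.sh container started" "cat log.txt"
# prints: cat log.txt
effective_command exec  /log-event.sh "container started" ""
# prints: /log-event.sh container started
effective_command exec  /log-event.sh "container started" "custom event"
# prints: /log-event.sh custom event
effective_command shell /log-event.sh "container started" "custom event"
# prints: /bin/sh -c /log-event.sh
```

The five calls mirror the scenarios walked through above: CMD alone, CMD overridden, exec-form entrypoint with default and custom arguments, and shell-form entrypoint.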

Difference between CMD and ENTRYPOINT in Docker

They both specify programs that execute when the container starts running, but:

CMD is ignored by the daemon when arguments are passed to the docker run command.

ENTRYPOINT is not ignored; instead, the arguments passed to docker run are appended to it as command-line parameters.

- When to use CMD

The best way to use a CMD instruction is by specifying default programs that should run when users do not input arguments in the command line.

This instruction keeps the container in a running state by starting an application as soon as the container starts.

Additionally, in specific use-cases, a docker run command can be executed through a CLI to override instructions specified within the Dockerfile.

- When to use ENTRYPOINT

ENTRYPOINT instructions are suitable for both single-purpose and multi-mode images where there is a need for a specific command to always run when the container starts.

One of its popular use-cases is building wrapper images that encapsulate legacy programs for containerization, where an ENTRYPOINT instruction ensures the program always runs.

Using ENTRYPOINT or CMD

Both ENTRYPOINT and CMD are useful when writing Dockerfiles; which one to use depends on your use case. As a general rule of thumb:

Opt for ENTRYPOINT instructions when building an executable Docker image using commands that always need to be executed.

CMD instructions are best for supplying default arguments that apply until they are overridden on the command line when the container runs.

- Using CMD & ENTRYPOINT instructions together

While there are fundamental differences in their operations, CMD and ENTRYPOINT instructions are not mutually exclusive. Several scenarios may call for the use of their combined instructions in a Dockerfile.

A very popular use case for blending them is to automate container startup tasks. In such a case, the ENTRYPOINT instruction can be used to define the executable while using CMD to define parameters.

Will cloud overtake the use of Containerization?

Docker containers are gaining popularity, and cloud services are growing alongside them. In my personal opinion, the cloud will not replace Docker, because the two complement each other: using cloud services together with containerization strengthens both. Organizations need to take their requirements and dependencies into consideration and decide what’s best for them. Most companies have integrated Docker with the cloud so that they can make the best of both technologies.

Is it a good practice to run stateful applications on Docker?

Stateful applications store their data on the local file system. If you decide to move the application to another machine, retrieving that data becomes painful. I honestly would not prefer running stateful applications on Docker.

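Suppose you have an application that has many dependent services. Will Docker Compose wait for the current container to be ready before starting the next service?

Only partly. Docker Compose always starts services in dependency order, as determined by depends_on, links, volumes_from, and similar settings. By default, however, it waits only until a dependency’s container has started, not until the application inside it is ready. To wait for readiness, define a healthcheck on the dependency and use depends_on with condition: service_healthy.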
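
Start order and readiness are separate concerns in Compose: a plain depends_on list only orders startup. As a hedged sketch, the file below pairs a health check with condition: service_healthy so that the app service starts only after the database reports ready. The service names, images, password, and timing values are illustrative assumptions.

```shell
workdir=$(mktemp -d)
cd "$workdir"

cat > docker-compose.yml <<'EOF'
services:
  db:
    image: postgres:16-alpine
    environment:
      POSTGRES_PASSWORD: example
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 5s
      timeout: 3s
      retries: 5
  app:
    image: alpine:3.19
    command: ["echo", "database is ready"]
    depends_on:
      db:
        condition: service_healthy
EOF

# Validate the file only when the Compose plugin is available.
if docker compose version >/dev/null 2>&1; then
  docker compose config --quiet && echo "compose file is valid"
fi
```

Without the condition, app would be started as soon as the db container exists, which may be before PostgreSQL accepts connections.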

How will you monitor Docker in production?

Docker provides functionalities like docker stats and docker events to monitor docker in production. Docker stats provides CPU and memory usage of the container. Docker events provide information about the activities taking place in the docker daemon.

Is it a good practice to run Docker compose in production?

Yes, Docker Compose can be used in production. When you define an application with Compose, you can reuse the same definition across environments such as CI, staging, testing, and production.

What changes are expected in your docker compose file while moving it to production?

These are the changes you need to make to your Compose file before migrating your application to the production environment:

- Remove volume bindings, so the code stays inside the container and cannot be changed from outside the container.

- Binding to different ports on the host.

- Specify a restart policy

- Add extra services like log aggregator

Have you used Kubernetes? If you have, which one would you prefer amongst Docker and Kubernetes?

Be very honest in such questions. If you have used Kubernetes, talk about your experience with Kubernetes and Docker Swarm. Point out the key areas where you thought Docker Swarm was more efficient and vice versa.

Can you tell the differences between a docker Image and Layer?

Image: An image is built up from a series of read-only layers. It is the template from which containers are created, and rebuilding it is fast because the result of each build step is cached.

Layer: Each layer corresponds to an instruction in the image’s Dockerfile. Put simply, a layer is itself an image: the intermediate image produced by running that instruction.

Consider the example Dockerfile below.

FROM ubuntu:18.04

COPY . /myapp

RUN make /myapp

CMD python /myapp/app.py

Importantly, each layer is only a set of differences from the layer before it.

Building this Dockerfile produces an image, and the instructions in the file add layers to that image. The layers can be thought of as intermediate images. In the example above there are 4 instructions, so 4 steps are recorded in the resulting image. Only the instructions that change the filesystem (here FROM, COPY and RUN) contribute content, while CMD only adds metadata.
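To see what "a set of differences" means, here is a toy model in which every layer is a dictionary of changed paths and the image is what you get by applying the layers in order. It is a simplification for illustration only; the file names and contents are invented, and real storage drivers are considerably more involved.

```python
def apply_layers(layers):
    """Merge layers in order; later layers override or delete earlier paths."""
    filesystem = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                # In this sketch, None marks a file deleted by the layer.
                filesystem.pop(path, None)
            else:
                filesystem[path] = content
    return filesystem


# Hypothetical contents for the layers added by FROM, COPY and RUN.
layers = [
    {"/bin/sh": "shell", "/etc/os-release": "ubuntu 18.04"},
    {"/myapp/app.py": "source", "/myapp/Makefile": "rules"},
    {"/myapp/build.out": "artifact"},
]
image_fs = apply_layers(layers)
print(sorted(image_fs))
```

Each layer stores only what changed, which is why two images built on the same base can share the base layers.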

What is the purpose of the volume parameter in a docker run command?

- The syntax of docker run with a volume is: docker run -v host_path:container_path <image_name>

- The volume parameter syncs a directory in the container with a directory on the host. Consider the command below as an example: docker run -v /data/app:/usr/src/app myapp

The command above mounts the host directory /data/app at /usr/src/app in the container. Files changed on the host are visible in the container immediately, without the need to restart it. The data also survives deletion of the container, because it lives in the mapped host directory, which makes this the easiest way to store container data.

Where are docker volumes stored in docker?

Volumes are created and managed by Docker, and non-Docker processes should not modify them. On a Linux host they are stored in the Docker host filesystem under /var/lib/docker/volumes/.

What does the docker info command do?

The command displays system-wide information about the Docker installation on the host. This includes the number of containers and images, the number of containers in each state (running, paused, stopped), and details about the host such as the kernel version, the number of CPUs and the total memory.

What are the networks that are available by default?

| Network | Description |
| --- | --- |
| bridge | The default network that all containers connect to if you don’t specify a network yourself |
| none | Connects the container to a container-specific network stack that lacks a network interface |
| host | Connects the container to the host’s network stack; as far as the network is concerned, there is no isolation between the host machine and the container |

How to get Docker containers to talk to each other while running on my local host?

I have a Webapp running completely locally on my MacBook.

The Webapp has a Front End (Angular/Javascript) and a Back End (Python/Django) which implements a RESTful API.

I have Dockerized the Back End so that it is completely self-contained in a Docker Container and exposes port 8000. I map this port locally to 4026.

Now I need to Dockerize the Front End. But if I have these two docker containers running on my localhost, how can I get the FE to send HTTP requests to the BE? The FE container won’t know anything that exists outside of it. Right?

Ans 1: You can run docker network ls to get a listing of your networks. By default, you should have one called bridge. You can either create a new network or use this one by passing --net=bridge when creating your containers. Containers launched on the same network can then communicate with each other over their exposed ports. On a user-defined network they can also reach each other by container name, whereas on the default bridge they have to use IP addresses.

If you use Docker Compose, as has been mentioned, running docker-compose up creates a bridge network for you, named after your project folder with _default appended. Each service defined in the Compose file is launched in this network automatically.

Ans 2: There are multiple ways to do this, and the simplest is to use Docker Compose, which lets you run multiple services together on one host.

When you run a Compose file, a “default” network is created, so you can add that network to another Compose file as an external network.

networks:
  isolated_nw:
    external: true

If you are not using Docker Compose and are running individual containers, publish each service’s port on the host and reach the services through those ports, for example:

docker run -p 3306:3306 mysql

docker run -p 8088:80 nginx

Now you can reach the containers through the host’s IP address: the MySQL server at hostip:3306 and the nginx server at http://hostip:8088.

We have several monolithic applications in our environment. But Docker only works for microservices right?

Docker can absolutely be used to containerize monolithic apps as well as microservices-based apps.

We find that most customers who are leveraging Docker containerize their legacy monolithic applications to benefit from the isolation that Docker containers provide, as well as portability. Remember Docker containers can package up any application (monolithic or distributed) and migrate workloads to any infrastructure. This portability is what enables our enterprise customers to embrace strategies like moving to the hybrid cloud.

In the case of microservices, customers typically containerize each service and use tools like Docker Compose to deploy these multi-container distributed applications into their production environment as a single running application.

We’ve even seen some companies have a hybrid environment where they are slowly restructuring their dockerized monolithic applications to become dockerized distributed applications over time.

What are the differences between the ‘docker run’ and the ‘docker create’?

- Run: creates a new container from an image and starts it. You can create N containers from the same image. The command is docker run IMAGE_ID, not docker run CONTAINER_ID.

- Start: launches a previously stopped container. For example, if you had stopped a database with the command docker stop CONTAINER_ID, you can relaunch the same container with the command docker start CONTAINER_ID, and the data and settings will be the same.

- Create: the ‘docker create’ command creates a container from an image but does not start it. It prints the new container’s ID, which can be stored and passed to docker start later.

What is the preferred way of removing containers – ‘docker rm -f’ or ‘docker stop’ then followed by a ‘docker rm’?

The preferred way of removing a container is to run ‘docker stop’ first. It sends a SIGTERM signal to the main process in the container, which gives it time to perform its finalization and cleanup tasks. If the process has not exited when the grace period ends (10 seconds by default), Docker sends SIGKILL.

Once the container has stopped, we can safely remove it with the ‘docker rm’ command. ‘docker rm -f’, by contrast, kills a running container immediately with SIGKILL and gives it no chance to clean up.
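A graceful stop only helps if the application in the container reacts to the signal. docker stop sends SIGTERM before falling back to SIGKILL, so a process can catch SIGTERM and finish its work. The sketch below shows one way to do that in Python; the class and function names are invented for the example and the "work" is just a list of items.

```python
import signal


class GracefulShutdown:
    """Record a stop request so the main loop can finish its work and clean up."""

    def __init__(self):
        self.stop_requested = False
        # Register the handler for the signal that `docker stop` sends first.
        signal.signal(signal.SIGTERM, self._handle)

    def _handle(self, signum, frame):
        # Only set a flag here; the main loop decides when to stop.
        self.stop_requested = True


def run(shutdown, work_items):
    """Process items until they run out or a stop has been requested."""
    done = []
    for item in work_items:
        if shutdown.stop_requested:
            break
        done.append(item)
    # Cleanup such as flushing buffers or closing connections would go here.
    return done
```

For the signal to arrive at all, the application has to be the main process of the container.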

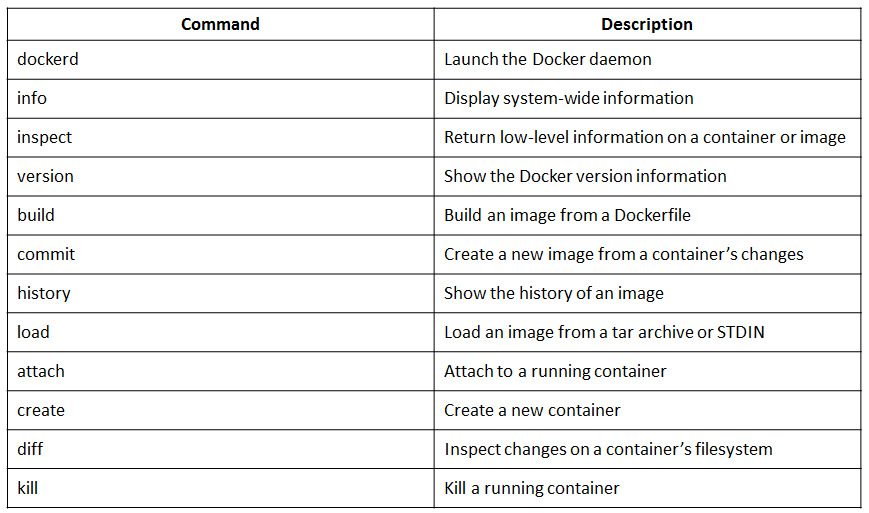

Can you mention some commonly used Docker commands?

Below are some commonly used Docker commands:

What are the main security concerns with Docker based containers?

The Top 5 Security Risks in Docker Container Deployment

1. UNSECURED COMMUNICATION AND UNRESTRICTED NETWORK TRAFFIC

By default, in some versions of Docker, all network traffic is allowed between containers on the same host. This increases the risk of unintended and unwanted disclosure of information to other containers. Developers should only allow intercommunication that is necessary by linking specific containers. This will significantly reduce attack surfaces by restricting container access.

To protect confidentiality and integrity of all network traffic, communications with Docker registries should be encrypted through TLS security protocol.

2. UNRESTRICTED ACCESS OF PROCESS AND FILES

An attacker who gains access to one container may be able to gain access to other containers or to the host. For example, a container may be able to reach system directories on the host by remounting them, and those directories are critical to security enforcement.

3. KERNEL LEVEL THREATS

Docker is designed so that all containers share the same kernel as the host. This provides convenience but also amplifies the impact of any vulnerabilities present in the kernel. System administrators should ensure that the host environment is properly hardened and patched to reduce the likelihood of privilege escalation, arbitrary code execution, and denial of service.

They should also restrict applications that run on privileged ports besides the ones that are necessary (e.g. Apache server), since those ports have more access to the kernel.

4. INCONSISTENT UPDATE AND PATCHING OF DOCKER CONTAINERS

Running an older version of Docker containers can expose internal IT environments to higher risks of breach, and potential loss of sensitive information.

New security features and bug fixes are often included in the update packages. Like any technology we run in the IT environment, a patching policy should be in place and enforced.

5. UNVERIFIED DOCKER IMAGES

Developers need to make sure they are downloading Docker images from trusted sources that are curated by the Docker community or the vendor, and run vulnerability scans against those images before running them in the host environment.

To ensure integrity of a container image, content trust should be enabled to allow digital signatures for data sent to and received from remote Docker registries.

What are the different kinds of namespaces available in a Container?

In a Container we have an isolated environment with namespace for each resource that a kernel provides. There are mainly six types of namespaces in a Container.

UTS Namespace: UTS stands for Unix Timesharing System. In UTS namespace every container gets its own hostname and domain name.

Mount Namespace: This namespace gives a container its own file system. The container gets its own root (/) directory, on which the rest of its file structure is based.

PID Namespace: This namespace contains all the processes that run within a Container. We can run ps command to see the processes that are running within a Docker container.

IPC Namespace: IPC stands for Inter Process Communication. This namespace covers resources shared between processes, such as shared memory, semaphores and message queues. The items in this namespace do not cross the container boundary.

User Namespace: This namespace contains the users and groups that are defined within a container.

Network Namespace: With this namespace, a container gets its own network resources, such as ports and devices. Docker uses it to create an independent network stack within each container.

Docker command to delete all running and stopped containers:

docker rm -f $(docker ps -aq)

docker rmi -f $(docker images -aq) : command to delete all the local images

Docker CMDs:

- docker version : shows the installed Docker version.

- docker info : shows system-wide information about the Docker installation.

- docker : lists the commands available in Docker.

- docker run ubuntu : creates and runs a container from the ubuntu image. Because no long-running command is given, the container exits immediately.

- docker ps (or sudo docker container ls) : lists all running containers with their status and IDs.

- docker ps -a (or sudo docker container ls -a) : lists all containers, running or exited.

- docker stop container_id (or container_name, i.e. the random name assigned by Docker) : stops the running container.

- docker rm container_id (or container_name) : removes a stopped container.

- docker images : lists all downloaded images.

- docker rmi image_name : removes an image. Stop and remove all containers created from the image before deleting it.

- docker pull ubuntu : only pulls the image (in this case ubuntu). Unlike run, it downloads the image without starting a container from it.

- docker run ubuntu sleep 1000 : the container created from ubuntu sleeps for 1000 seconds before exiting.

- docker exec container_id (or container_name) cat /etc/hosts : executes a command in the specified running container, whereas run creates a new container from an image and executes the command there.

- docker run -it centos bash : opens an interactive terminal in a new container created from the centos image.

- docker run -d centos sleep 20 : runs a container from the centos image that sleeps for 20 seconds and then exits. -d runs the container in the background.

- docker inspect container_id : shows the details of the container, including its IP address.

- docker stats container_id (or name) : shows performance stats for the container.

- docker top container_id (or name) : lists the processes in the container.

docker network

docker run ubuntu (will use network = bridge)

docker run --network=none ubuntu (will use network = none)

docker run --network=host ubuntu (will use network = host)

docker network create \
  --driver bridge \
  --subnet 182.18.0.0/16 \
  custom-isolated-network

docker network ls ( to list all the network)

docker network inspect

docker network connect

docker network disconnect

Round-robin

Round Robin DNS is a technique of load distribution, load balancing, or fault-tolerance provisioning multiple, redundant Internet Protocol service hosts, e.g., Web server, FTP servers, by managing the Domain Name System’s (DNS) responses to address requests from client computers according to an appropriate statistical model.

In its simplest implementation, Round-robin DNS works by responding to DNS requests not only with a single potential IP address, but with one out of a list of potential IP addresses corresponding to several servers that host identical services.

- sudo docker network create testingnet

- sudo docker run -d --network testingnet --network-alias searcheng elasticsearch:2

- sudo docker run -d --network testingnet --network-alias searcheng elasticsearch:2 (run a second time, so that two containers share the alias searcheng)

- sudo docker run --rm --net testingnet alpine nslookup searcheng

- sudo docker run --rm --net testingnet centos curl -s searcheng:9200
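The effect of several hosts answering to one name can be modelled in a few lines. This is a simplified illustration of round-robin resolution, not Docker's embedded DNS server; the class name and the IP addresses are made up, and a real resolver may order its answers differently.

```python
from itertools import cycle


class RoundRobinResolver:
    """Hand out the addresses registered for a name in rotating order."""

    def __init__(self):
        self._records = {}
        self._cursors = {}

    def register(self, name, address):
        self._records.setdefault(name, []).append(address)
        # Rebuild the rotation whenever the address list changes.
        self._cursors[name] = cycle(list(self._records[name]))

    def resolve(self, name):
        return next(self._cursors[name])


resolver = RoundRobinResolver()
resolver.register("searcheng", "172.18.0.2")  # hypothetical first container
resolver.register("searcheng", "172.18.0.3")  # hypothetical second container
print([resolver.resolve("searcheng") for _ in range(4)])
```

Successive lookups alternate between the two addresses, so requests to the alias are spread across both containers.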

Docker RUN command

- docker run ubuntu : runs the latest version of ubuntu

- docker run ubuntu:17.04 : (TAGS) runs a specific version of ubuntu

- docker run -d ubuntu sleep 2000 : (DETACH) runs the command in detached mode, i.e. the command prompt returns to the user

- docker attach container_id : (ATTACH) attaches back to the running container

- docker run -i ubuntu : (STDIN) keeps standard input open so the container can read from it

- docker exec -it container_id bash : opens a shell in a running container

- docker run -p 80:5000 testing/simple-webapp : (PORT MAPPING) 80 is the port on the server and 5000 is the port in the Docker container

- docker run -p 8090:5000 testing/simple-webapp : runs another instance of your app mapped to a different port on the server, i.e. the Docker host

- docker run -p 8306:3306 mysql

- /var/lib/centos — data are stored in this location of centos container

- /var/lib/mysql — data are stored in this location of mysql container

- docker run -v /opt/datadir:/var/lib/mysql mysql : (VOLUME MAPPING) maps the container’s /var/lib/mysql directory to /opt/datadir on the Docker host (i.e. the server), so the data is stored on the host rather than only inside the container. Even if the container is removed, its data remains.

- docker run ubuntu cat /etc/*release*

- docker run -p 8080:8080 -p 50000:50000 -v /your/home:/var/jenkins_home -u techiematerials jenkins (where techiematerials is the user that has access to the /your/home directory)

- docker run -it ubuntu bash (i for standard input and t for opening a terminal in the container)

- docker run -it --rm ubuntu bash (--rm deletes the container once it exits)

- docker run -e APP_COLOR=green simple-webapp-color (where -e sets an environment variable and simple-webapp-color is the image name)
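On the application side, a value passed with -e simply shows up as an environment variable. The code of simple-webapp-color is not shown here, so the following is only a guess at how such an app could read the setting; the function name and the default colour are assumptions.

```python
import os


def app_color(default="red"):
    """Return the colour configured through APP_COLOR, or a default if unset."""
    # `docker run -e APP_COLOR=green ...` makes this variable visible to the process.
    return os.environ.get("APP_COLOR", default)


print(app_color())
```

Reading configuration from the environment lets the same image run with different settings without being rebuilt.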

docker images command

Dockerfile

——-

FROM ubuntu

RUN apt-get update

RUN apt-get install -y python

RUN pip install flask

RUN pip install flask-mysql

COPY . /opt/source-code

ENTRYPOINT FLASK_APP=/opt/source-code/app.py flask run

——–

- docker build . -t testdocker/my-flask-image (testdocker is my account name)

- docker image tag nginx-with-html:latest testing/nginx-with-html:latest

- docker tag testnode testdocker/testing-node

- docker push testdocker/my-flask-image (testdocker is my account name)

Every Docker image is based on another image (typically an OS base image) or is built from scratch.

- docker history image-name (shows the layers of the image and the size of each layer)

- docker build . (rebuilds the Docker image from the Dockerfile)

Steps to dockerize/Containerize application :

1. Run all the steps manually in a container, one by one, start the app, and note down each step (update, installation, code copy, running the app).

2. Then create a Dockerfile.

3. Build the Dockerfile to create an image.

4. Push the image to Docker Hub for future use. Log in first, then push:

- docker login

- docker push testdocker/testing-app

Dockerfile (name of image ubuntu-sleeper)

FROM ubuntu

ENTRYPOINT ["sleep"]

CMD ["5"]

- docker run ubuntu-sleeper (sleeps for 5 seconds by default)

- docker run ubuntu-sleeper 15 (15 replaces the CMD value and is passed to the entrypoint, so it sleeps for 15 seconds)
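How the two instructions combine can be written down as a tiny function. This is a sketch of the rule for the exec (JSON array) form only, assuming no --entrypoint override; the function name is made up.

```python
def effective_command(entrypoint, cmd, run_args):
    """Return the command a container runs, given ENTRYPOINT, CMD and run arguments."""
    # Arguments given after the image name replace CMD; ENTRYPOINT is kept.
    arguments = list(run_args) if run_args else list(cmd)
    return list(entrypoint) + arguments


print(effective_command(["sleep"], ["5"], []))      # docker run ubuntu-sleeper
print(effective_command(["sleep"], ["5"], ["15"]))  # docker run ubuntu-sleeper 15
```

ENTRYPOINT fixes the program to run and CMD supplies default arguments that the user can replace on the command line.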

Docker compose

Steps to install docker-compose:

- sudo yum install -y epel-release

- sudo yum install -y python-pip

- sudo pip install docker-compose

- sudo yum upgrade python*

To install docker-compose on Ubuntu:

sudo curl -L "https://github.com/docker/compose/releases/download/1.24.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

- docker-compose version

- sudo docker-compose version

docker-compose.yml

services:
  web:
    image: "testing/simple-webapp"
    ports:
      - "80:5000"
  database:
    image: "mysql"
    volumes:
      - /opt/data:/var/lib/mysql

- docker-compose up (creates two containers, one from "testing/simple-webapp" and the other from "mysql")

- docker-compose stop (stops the containers)

- docker-compose down (stops and deletes the containers)

A docker-compose.yml file to deploy a WordPress application using Docker. The WordPress application consists of a web server and a database server. For the web server we use the wordpress image, and for the database server we use the mysql image.

docker-compose.yml

version: '3'
services:
  mysql:
    image: "mysql"
    environment:
      - MYSQL_ROOT_PASSWORD=password
    volumes:
      - "/data/mysql:/var/lib/mysql"
  web:
    image: "wordpress"
    ports:
      - "8080:80"
    environment:
      - WORDPRESS_DB_PASSWORD=password

How do you connect docker daemon with docker engine?

Using the Docker CLI, which sends commands to the Docker daemon through its REST API.

Explain various types of Docker Volumes: Why, When, and Which Ones?

Docker volumes are used to persist data from within a Docker container. There are a few different types of Docker volumes: host, anonymous, and named.

Why Docker Volumes?

Docker volumes are the preferred way to save data over restarts of a Docker container. When compared to bind mounts, here are some of the advantages of volumes:

- Volumes are easier to back up and migrate.

- Managing volumes can be done from the Docker CLI or Docker API.

- They work on Linux and Windows.

- Volumes are safer to share among containers.

- Volume drivers allow volumes to be stored on remote hosts or cloud providers or to be encrypted.

- New volumes can be pre-populated by the container.

- Volumes do not increase the size of the container.

When to Use Each Volume

There are a few different types of volumes, each with its own purpose and benefits.

Host volumes

A host volume can be accessed from within a Docker container and, as the name suggests, is stored at a path you choose on the host (Docker’s documentation calls this a bind mount). To create a host volume, run:

docker run -v /path/on/host:/path/in/container …

I suggest using a host volume when you need to know where to refer to the data. It’s also the easiest type of volume to use, so it’s ideal for simple projects.

Anonymous volumes

The location of anonymous volumes is managed by Docker. Note that it can be difficult to refer to the same volume when it is anonymous. To create an anonymous volume, run:

docker run -v /path/in/container …

Anonymous volumes provide flexibility, but they aren’t used as often now that named volumes have been introduced.

Named volumes

Named volumes and anonymous volumes are similar in that Docker manages where they are located. However, as you might guess, named volumes can be referred to by specific names. To create a named volume, run:

docker volume create somevolumename

docker run -v somevolumename:/path/in/container …

Like anonymous volumes, named volumes provide flexibility, but they are also explicit. This makes them easier to manage.
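The three forms of the -v value differ only in what comes before the colon, which a short function can make explicit. This is an illustrative sketch, not Docker's own parsing; it assumes absolute Unix-style host paths, and the function name is made up.

```python
def volume_kind(spec):
    """Classify the value passed to `docker run -v` as host, anonymous or named."""
    parts = spec.split(":")
    if len(parts) == 1:
        # Only a container path was given, so Docker picks the location.
        return "anonymous"
    source = parts[0]
    # A source starting with "/" is a path on the host; otherwise it is a volume name.
    return "host" if source.startswith("/") else "named"


for spec in (
    "/path/on/host:/path/in/container",
    "/path/in/container",
    "somevolumename:/path/in/container",
):
    print(spec, "->", volume_kind(spec))
```

Seen this way, a named volume is an anonymous volume with a label you choose, while a host volume points at a directory you manage yourself.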

You can also specify Docker volumes in your docker-compose.yaml file using the same syntax as the examples above. Here’s an example of a named volume I used in a WordPress Docker container.

volumes:
  - db_data:/var/lib/mysql

Volumes are helpful for saving data across restarts of your Docker containers. If you need to use a volume, consider the difference between the various kinds before starting development. This will make it easier to save data from the start and specify your volume in your Dockerfile.